I am a fourth-year PhD student at Wuhan University, co-supervised by Prof. Gui-Song Xia and Nan Xue.

2025-05 The paper Sat2Density++ is released.

2024-04 Two papers are accepted by CVPR 2024.

2023-07 Sat2Density is accepted by ICCV 2023.

2020-08 BGGAN is accepted by ECCVW 2020.

2020-07 We won the Winner Award at the ECCV AIM 2020 Challenge on Rendering Realistic Bokeh, together with Congyu and Jiamin.

I'm interested in 3D vision and image processing. Much of my research is about inferring the physical world and the camera (shape, motion, color, light, bokeh, etc.) from images.

Ming Qian, Bin Tan, Qiuyu Wang, Xianwei Zheng, Hanjiang Xiong, Gui-Song Xia, Yujun Shen, Nan Xue
arXiv, 2025
project page / arXiv
The journal extension of Sat2Density, which significantly improves the consistency and quality of generated street-view videos and images.


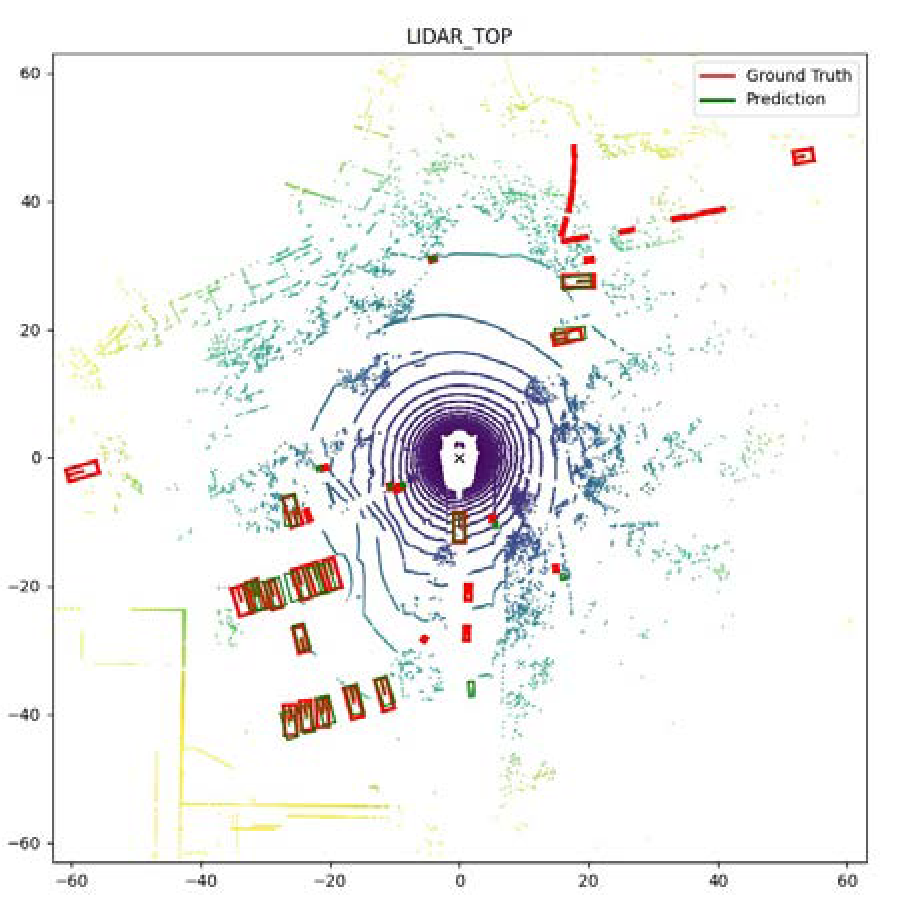

Xianpeng Liu, Ce Zheng, Ming Qian, Nan Xue, Chen Chen, Zhebin Zhang, Chen Li, Tianfu Wu
CVPR, 2024
arXiv

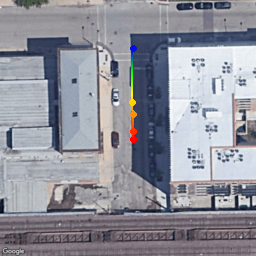

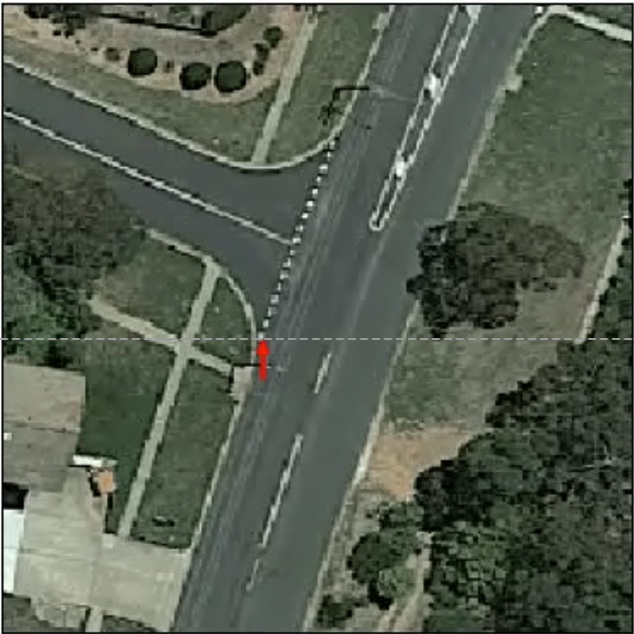

Ming Qian, Jincheng Xiong, Gui-Song Xia, Nan Xue
ICCV, 2023
project page / code / arXiv
Sat2Density focuses on the geometric nature of generating high-quality ground-level street videos conditioned on satellite images, learning from collections of satellite-ground image pairs.

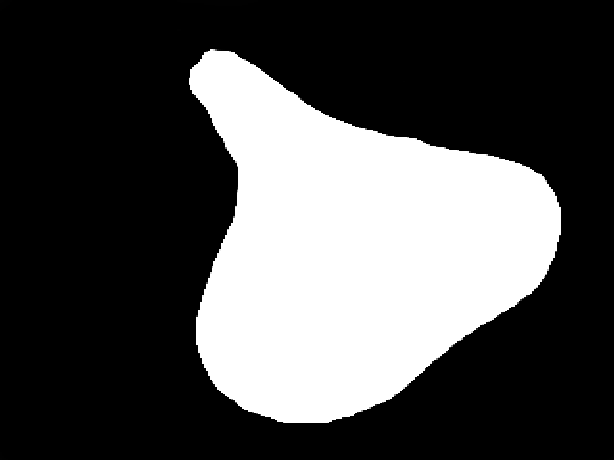

Yuxin Jin*, Ming Qian*, Jincheng Xiong, Nan Xue, Gui-Song Xia
ICME, 2023
arXiv / Code / Paper
D-DFFNet considers the physical mechanism of defocus blur and successfully distinguishes homogeneous regions. In addition, we propose a larger benchmark, EBD, that includes more DOF cases. The detection results on multiple public test sets look great.

Ming Qian, Congyu Qiao, Jiamin Lin, Zhenyu Guo, Chenghua Li, Cong Leng, Jian Cheng
ECCVW, 2020
arXiv / Code
BGGAN synthesizes bokeh-effect images from bokeh-less images end to end. Ranked 1st in the ECCV AIM 2020 challenge.

Design and source code from Jon Barron's website